Article by Eric Worrell

“…Google adds machine learning to climate models for ‘faster forecasts’…”

The secret to better weather forecasts may be artificial intelligence

Google adds machine learning to climate models for 'faster forecasts'

Tobias Mann

Saturday, July 27, 2024 // 13:27 UTC…

In a paper published this week in the journal Nature, a team from Google and the European Center for Medium-Range Weather Forecasts (ECMWF) details a novel approach that uses machine learning to overcome the limitations of existing climate models and try to produce forecasts faster and more accurately.

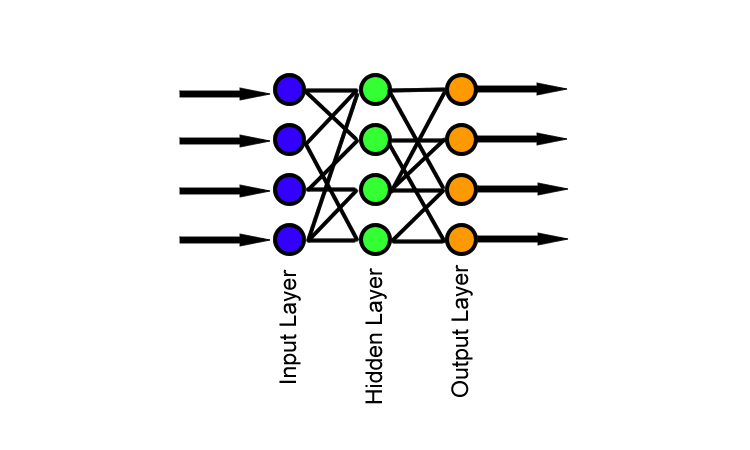

The model, called NeuralGCM, was developed using historical weather data collected by ECMWF and uses neural networks to enhance more traditional HPC-style physics simulations.

As Stephan Hoyer, one of the team members behind NeuralGCM, wrote in a recent report, most climate models today work by dividing the Earth into cubes 50-100 kilometers on each side and then simulating how air and moisture move through them according to known laws of physics.
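To give a sense of scale, here is a rough, back-of-the-envelope count of how many horizontal grid columns it takes to cover the globe at 50 km and 100 km spacing, plus the 140 km resolution NeuralGCM uses for its climate runs. It assumes an Earth surface area of about 510 million km², square cells and no vertical levels, so treat the numbers as orders of magnitude only.

```python
# Rough count of horizontal grid columns needed to tile Earth's surface.
# Assumptions: Earth's surface area is about 510 million km^2, cells are
# square, vertical levels are ignored, and no correction is made for grid
# geometry near the poles.
EARTH_SURFACE_KM2 = 510e6


def column_count(cell_km: float) -> int:
    """Approximate number of cell_km x cell_km columns covering the globe."""
    return round(EARTH_SURFACE_KM2 / (cell_km ** 2))


for cell_km in (50, 100, 140):
    print(f"{cell_km:>4} km cells: ~{column_count(cell_km):,} columns")
```

Halving the cell width roughly quadruples the number of columns, which is one reason finer-resolution physics models get expensive quickly.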

NeuralGCM operates in a similar way, but with additional machine learning used to track climate processes that are not necessarily well understood or occur at smaller scales.
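The hybrid idea can be illustrated with a toy, one-variable sketch: a "known physics" step that leaves out one process, plus a learned correction fitted to the difference between that step and "reality". This is not NeuralGCM's method. The paper trains neural networks end to end through a differentiable atmospheric solver, whereas this sketch fits a polynomial correction by ordinary least squares. The equations, coefficients and function names are all hypothetical.

```python
import numpy as np

DT, K, C = 0.1, 0.5, 0.3  # hypothetical time step and coefficients


def true_step(x):
    # "Reality": linear damping plus a cubic term the physics model omits.
    return x + DT * (-K * x - C * x**3)


def physics_step(x):
    # The "known physics" solver only includes the linear damping.
    return x + DT * (-K * x)


def features(x):
    # Polynomial features standing in for a neural network's inputs.
    return np.stack([x, x**2, x**3], axis=-1)


# Training data: states sampled from the true system.
rng = np.random.default_rng(0)
x_train = rng.uniform(-2.0, 2.0, size=500)
residual = true_step(x_train) - physics_step(x_train)

# Fit the correction by least squares (a stand-in for training a network).
weights, *_ = np.linalg.lstsq(features(x_train), residual, rcond=None)


def hybrid_step(x):
    # Physics step plus the learned correction for the missing process.
    return physics_step(x) + features(x) @ weights


def rollout(step, x0, n):
    # Apply a one-step model repeatedly, starting from x0.
    xs = [x0]
    for _ in range(n):
        xs.append(step(xs[-1]))
    return np.array(xs)


truth = rollout(true_step, 1.5, 50)
phys = rollout(physics_step, 1.5, 50)
hyb = rollout(hybrid_step, 1.5, 50)
print("physics-only max error:", np.abs(phys - truth).max())
print("hybrid max error:      ", np.abs(hyb - truth).max())
```

The toy works because the missing term happens to be expressible in the chosen features. A real hybrid model has no such guarantee, which is part of why the learned component is hard to interpret.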

…

Learn more: https://www.theregister.com/2024/07/27/google_ai_weather/

Research summary:

Neural general circulation model of weather and climate

Dmitrii Kochkov, Janni Yuval, Ian Langmore, Peter Norgaard, Jamie Smith, Griffin Mooers, Milan Klöwer, James Lottes, Stephan Rasp, Peter Düben, Sam Hatfield, Peter Battaglia, Alvaro Sanchez-Gonzalez, Matthew Willson, Michael P. Brenner and Stephan Hoyer

Abstract

General circulation models (GCMs) are the foundation of weather and climate prediction1,2. GCMs are physics-based simulators that combine a numerical solver for large-scale dynamics with tuned representations of small-scale processes such as cloud formation. Recently, machine learning models trained on reanalysis data have achieved comparable or better skill than GCMs for deterministic weather forecasting3,4. However, these models have not demonstrated improved ensemble forecasts, or shown sufficient stability for long-term weather and climate simulations. Here we present a GCM that combines a differentiable solver for atmospheric dynamics with machine learning components and show that it can generate forecasts of deterministic weather, ensemble weather and climate on par with the best machine learning and physics-based methods. NeuralGCM is competitive with machine learning models for 1 to 10 day forecasts and with the European Center for Medium-Range Weather Forecasts ensemble prediction for 1 to 15 day forecasts. With prescribed sea surface temperatures, NeuralGCM can accurately track climate metrics for multiple decades, and climate forecasts at 140 km resolution show emergent phenomena such as the realistic frequency and trajectories of tropical cyclones. For both weather and climate, our approach offers orders of magnitude computational savings over conventional GCMs, although our model does not extrapolate to substantially different future climates. Our results show that end-to-end deep learning is compatible with tasks performed by conventional GCMs and can enhance the large-scale physical simulations that are essential for understanding and predicting the Earth system.

Learn more: https://www.nature.com/articles/s41586-024-07744-y

From my reading of the main study, the authors seem to claim that adding neural network magic can produce better short-term weather forecasts and climate predictions.

The researchers tested their model by holding back some of the data from training, then using the trained neural network to generate weather forecasts from real-world data the AI had never seen. They also discuss how their model's predictions diverge from reality after 3 days: “At longer lead times, RMSE increases rapidly due to chaotic divergence of nearby weather trajectories, making RMSE less informative for deterministic models”. But they claim their approach still performs better than, or comes close to, traditional methods once this chaotic divergence is taken into account.
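Why RMSE stops being informative at long lead times can be seen in a toy chaotic system. The sketch below uses the Lorenz 1963 equations, a classic three-variable model of chaos rather than an atmosphere model, and runs two "forecasts" that start one millionth apart. The step size, run length and perturbation size are arbitrary choices for illustration.

```python
import numpy as np


def lorenz_rhs(s, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    # Time derivatives of the Lorenz 1963 system with its classic parameters.
    x, y, z = s
    return np.array([sigma * (y - x), x * (rho - z) - y, x * y - beta * z])


def rk4_step(s, dt):
    # One fourth-order Runge-Kutta integration step.
    k1 = lorenz_rhs(s)
    k2 = lorenz_rhs(s + 0.5 * dt * k1)
    k3 = lorenz_rhs(s + 0.5 * dt * k2)
    k4 = lorenz_rhs(s + dt * k3)
    return s + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)


def trajectory(s0, dt=0.01, n=3000):
    # Integrate n steps from initial state s0, returning every state.
    out = np.empty((n + 1, 3))
    out[0] = s0
    for i in range(n):
        out[i + 1] = rk4_step(out[i], dt)
    return out


truth = trajectory(np.array([1.0, 1.0, 1.0]))
forecast = trajectory(np.array([1.0 + 1e-6, 1.0, 1.0]))  # tiny initial error

# RMSE across the three variables at each time step.
rmse = np.sqrt(((forecast - truth) ** 2).mean(axis=1))
for step in (0, 500, 1000, 1500, 2000, 2500, 3000):
    print(f"t={step * 0.01:5.1f}  RMSE={rmse[step]:.2e}")
```

The error grows roughly exponentially until it is as large as the typical distance between two unrelated states of the system. After that point, RMSE mostly measures chaos rather than model quality, which is the behavior the quoted passage describes.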

I'm a bit skeptical about trusting the predictive power of neural network black boxes. History is littered with cases where scientists followed all the steps the authors described, only to discover that their neural networks behaved very differently than expected on demonstration day. I would have preferred they put more effort into reverse engineering their neural network, teasing out what (if anything) it actually discovered, and seeing whether it found new atmospheric physics that could be used to create better deterministic white-box models.

In 2018, Amazon suffered a major embarrassment when one of its neural networks went wrong. The company tried using a neural network to screen applicants for technical jobs, but found that it was biased against female candidates. The neural network noticed that most candidates were male and concluded that it should discard applications from female candidates because of their gender.

Anyone who thinks Amazon’s neural network gender bias has any basis in reality needs to visit an Asian tech store. Somehow, in the West, we raise our girls to believe that they are not cut out for tech jobs. The few women who made it through the filter of Western culture, such as Margaret Hamilton, who led the Apollo guidance computer programming team, have more than demonstrated their abilities. Hamilton is credited with coining the term “software engineering.”

Neural networks are very useful because they can identify relationships that are not obvious. But this ability to see non-obvious patterns in data comes with a serious risk of false positives, that is, seeing patterns that don’t exist. This is especially true when analyzing physical phenomena: once you have a magical black-box neural network that purportedly exhibits superior skill, if you don't try to reverse-engineer its inner workings, you have no way of knowing whether what you're seeing is real or whether the apparent skill is just a subtle false positive.
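The false-positive risk is easy to demonstrate even without a neural network. The sketch below screens 1,000 purely random "predictors" against a purely random target. By construction there is no real relationship, yet some predictors look strongly correlated in-sample. This is a generic multiple-comparisons illustration, not a claim about how NeuralGCM was trained or evaluated; the sample sizes and seed are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(42)
n_samples, n_features = 50, 1000

# Target and candidate predictors are independent noise: no real pattern.
target = rng.standard_normal(n_samples)
predictors = rng.standard_normal((n_samples, n_features))

# Pearson correlation of each predictor column with the target.
pc = predictors - predictors.mean(axis=0)
tc = target - target.mean()
corr = (pc * tc[:, None]).sum(axis=0) / (
    np.linalg.norm(pc, axis=0) * np.linalg.norm(tc)
)

# 0.279 is roughly the two-sided 5% critical value of r for n = 50.
flagged = int(np.sum(np.abs(corr) > 0.279))
best = int(np.argmax(np.abs(corr)))
print(f"'significant' predictors found in pure noise: {flagged} of {n_features}")
print(f"strongest in-sample |r|: {abs(corr[best]):.2f} (predictor {best})")

# Fresh samples of the chosen predictor and the target, generated the same
# independent way, show the "discovery" does not hold up.
new_target = rng.standard_normal(n_samples)
new_pred = rng.standard_normal(n_samples)
print(f"same predictor on new data: r = {np.corrcoef(new_pred, new_target)[0, 1]:.2f}")
```

Holding out data guards against this kind of fluke only if the held-out data is genuinely independent of every choice made while building the model.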
