Guest Comment by Kip Hansen — August 27, 2024 — 1,200 words

Last week I wrote an article here titled: “Facts that don’t make sense – Innuendo’s “Fact Check””. One of my complaints about the false fact check conducted by three staff members at Logically Facts was that it read suspiciously like it had been written by an AI chatbot, a suspicion strengthened by the fact that Logically Facts’ claim to fame is that it is an AI-based operation.

I made the following statements:

“Logically Facts is a large language model type of AI, supplemented by writers and editors meant to clean up the mess that this chatbot type of AI returns. As such, it is completely unable to make any value judgments between repeated slander, enforced consensus views, the prevailing biases of scientific fields, and actual facts. Furthermore, any AI based on an LLM is unable to think critically and draw logical conclusions.

“Logically Facts and Logically.ai, the other products in the Logically empire, suffer from all the major flaws of current versions of various types of AI, including hallucinations, crashes, and the AI version of ‘you are what you eat.’”

This article is very well written and exposes one of the many major flaws of modern AI Large Language Models (AI LLMs). AI LLMs are used to generate text responses to chatbot-type questions, power web “searches,” and create images on request.

It has long been known that LLMs can and do “hallucinate.” Wikipedia gives an example here. IBM gives a very good description of the problem, which you should read now; at least the first six paragraphs give some idea of how these hallucinations come about:

“Some famous examples of AI hallucinations include:

- Google's Bard chatbot falsely claiming that the James Webb Space Telescope had captured the world's first image of an extrasolar planet. [NB: I was unable to verify this claim – kh]

- Microsoft's chat AI, Sydney, admitting to falling in love with users and spying on Bing employees

- Meta pulling its Galactica LLM demo in 2022 because it provided users with inaccurate information, sometimes based on bias.”

So the fact that AI LLMs can and do return not only incorrect, non-factual information but also completely “made-up” information, images, and even references to non-existent journal articles should shatter any fantasy that an AI LLM is intelligent.

Now we add another layer of reality, another lens through which you should view any AI LLM-based answer to a question you might have. Keep in mind that AI LLMs are currently being used to write thousands of “news articles” (such as, I suspect, the Logically Facts “analysis” of climate change denial), journal articles, editorials, and TV and radio news scripts.

AI LLMs: They are what they eat

A recent article in the New York Times [repeating the link] describes well, and warns of, the dangers of LLMs being trained on their own output.

What is LLM training?

“As they (AI companies) scour the web for new data to train their next models, an increasingly challenging task, they will likely ingest some of their own AI-generated content, thereby creating an unintentional feedback loop in which content that was once the output of one AI becomes the input of another.”

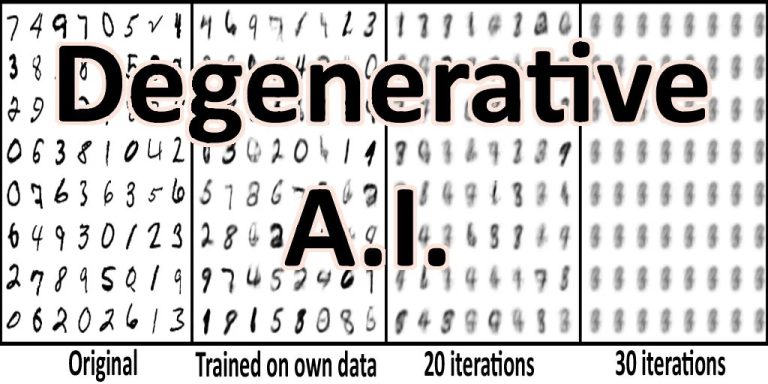

The Times provides a fantastic example of what happens when an AI LLM is trained on its own output, in this case a model that is supposed to read and reproduce handwritten digits.

You can see that even after the very first round of training on self-generated data, the LLM returns incorrect data, that is, wrong digits: the 7 in the upper left corner becomes a 4, the 3 below it becomes an 8, and so on. After 30 iterations, all the digits have become homogenized, which is to say they represent nothing at all: no discernible digits, all the same.

The Times article, by Aatish Bhatia, cleverly quips: “Degenerative AI.”

Consider the implications of this kind of training once humans can no longer easily distinguish AI-generated output from human-written output. In AI training, only the words (and, in images, the pixels) enter into the calculation of the probability of a given output; the AI is in effect answering for itself the question: “What is the most likely word to use next?”
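To make the “most likely next word” idea concrete, here is a minimal sketch in Python. It is a toy bigram counter, not how any production LLM is actually built; the corpus and the function names (`train_bigram_model`, `next_word_probabilities`, `most_likely_next`) are invented for illustration. It shows only that such a model knows nothing beyond the word counts it was fed.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def next_word_probabilities(model, word):
    """Turn the raw follower counts for `word` into probabilities."""
    counts = model.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

def most_likely_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = model.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical "training corpus", invented for this sketch.
corpus = "the cat sat on the mat the cat ate the fish the dog sat on the rug"
model = train_bigram_model(corpus)

print(next_word_probabilities(model, "the"))
print(most_likely_next(model, "the"))
```

In this made-up corpus “the” is followed by “cat” twice out of six times, so “cat” is the answer the model gives. Whether “cat” is true, fair, or sensible never enters into it; only frequency does.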

You really have to look at the “distribution of AI-generated data” example used in the Times article. As an AI is trained on its own previous output (“eats itself” – kh), the probability distribution becomes narrower and the data becomes less diverse.
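The narrowing can be reproduced with a toy model far simpler than an LLM. The sketch below is my own illustration under stated assumptions, not the Times' code: the “model” is just a bell curve fitted to a small data set, and each new generation is trained only on samples drawn from the previous fit. The sample size, generation count, and seed are arbitrary choices.

```python
import random
import statistics

def simulate_self_training(generations=100, sample_size=10, seed=0):
    """Refit a bell curve to its own samples and record its width each round."""
    rng = random.Random(seed)
    # Generation 0: stand-in for "human" data, drawn from a standard normal.
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
    widths = []
    for _ in range(generations):
        # "Train": estimate the mean and spread of the current data.
        mean = statistics.fmean(data)
        spread = statistics.pstdev(data)
        widths.append(spread)
        # "Generate": the next data set comes only from the fitted model.
        data = [rng.gauss(mean, spread) for _ in range(sample_size)]
    # Record the spread of the final generation as well.
    widths.append(statistics.pstdev(data))
    return widths

widths = simulate_self_training()
print(f"spread at start: {widths[0]:.3f}")
print(f"spread after {len(widths) - 1} generations: {widths[-1]:.3g}")
```

Any single generation may come out slightly wider than the one before, but each refit on a small sample tends to lose a little of the tails, and the losses compound. After enough rounds the fitted curve is a narrow spike and nearly every “new” data point is the same value.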

I have written before: “The problem is obvious: in any kind of dispute, the most ‘official’ and most widespread view wins and is declared ‘true,’ while the opposing view is declared ‘misinformation’ or ‘disinformation.’ Individuals holding minority views are labeled ‘deniers’ (of whatever), and all slander and libel directed against them is rated ‘true’ by default.”

Since today’s media organizations generally skew in the same direction, leaning toward the left, liberalism, and progressivism and favoring a single political party or point of view (which differs slightly from country to country), AI LLMs are trained to be biased toward that point of view, with major media organizations pre-judged to be “reliable sources of information.” By the same standard, sources holding opinions, views, or facts contrary to the prevailing bias are pre-judged to be “unreliable, incorrect, or false.”

As such, AI LLMs are trained on stories mass-produced by AI LLMs, lightly edited by human authors so that they read less like machine-generated text, and then published in major media outlets. Having repeatedly “eaten” their own output, AI LLMs give answers to questions that become increasingly narrow, less diverse, and less realistic.

This results in:

As LLMs are trained on their own data, “models are poisoned by their own projections of reality.”

Consider what we find in the real world of climate science. The IPCC reports are produced by humans, based on the work of climate scientists and others published in trusted peer-reviewed scientific journals. It is well known that unqualified papers are almost completely excluded from such journals because they are unqualified. Some may sneak through, and some may find pay-to-publish journals willing to print these substandard papers, but not many see the light of day.

Therefore, only consensus climate science enters the “credible literature” on the entire topic. John P. A. Ioannidis noted: “Moreover, for many current scientific fields, claimed research findings may often be simply accurate measures of the prevailing bias.”

AI LLMs are therefore trained on reliable sources that are already shaped by publication bias, funding bias, the prevailing biases of their own fields, fear of non-conformity, and groupthink. Worse, as AI LLMs are trained on their own output, or on the output of other AI LLMs, the results become less realistic, less diverse, and less reliable, potentially poisoned by their own false projections of reality.

It seems to me that many sources of information are already showing the effects of the coming AI LLM debacle: a subtle blurring of fact, opinion, and outright fiction.

######

Author comments:

We live in interesting times.

Be careful what you take in: read and think critically, and educate yourself from first principles and basic science.

Thank you for reading.

######
